Quantum Computing for High-Energy Physics: A Roadmap to the Future

The Science Frontier Talks to the Computing Frontier

The scientific article "Quantum Computing for High-Energy Physics: State of the Art and Challenges", published in PRX Quantum, outlines the potential of quantum computing (QC) as a transformative technology in the field of high-energy physics (HEP). This comprehensive roadmap, written by a collaboration between CERN, DESY, IBM, and various academic institutions, addresses the current state and future challenges of integrating quantum computing into HEP research.

Overview

Quantum computing has garnered significant attention as a possible solution to computational problems that are infeasible for classical computers, especially in fields requiring immense computational resources like HEP. The article explores how quantum computers, with their unique capabilities, could address some of the most challenging problems in HEP, such as simulating complex quantum systems and analyzing vast amounts of data from particle experiments.

The Potential of Quantum Computing in HEP

One of the most promising applications of quantum computing in HEP lies in its potential to achieve quantum advantage, where quantum computers can outperform classical ones by a significant margin. This could revolutionize numerical simulations in theoretical physics, particularly in scenarios that are currently unapproachable with classical methods due to their complexity.

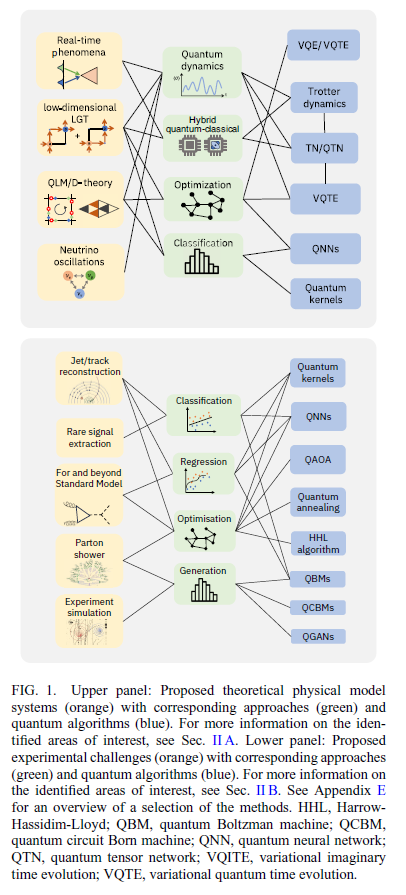

The article highlights two main areas where quantum computing can make an immediate impact:

Theoretical Modeling: Quantum computers could be used to simulate real-time dynamics of quantum field theories and other complex systems that are intractable with classical computing methods. For example, lattice gauge theories, which are crucial for understanding quantum chromodynamics (QCD) and other aspects of particle physics, could be simulated more efficiently using quantum algorithms.

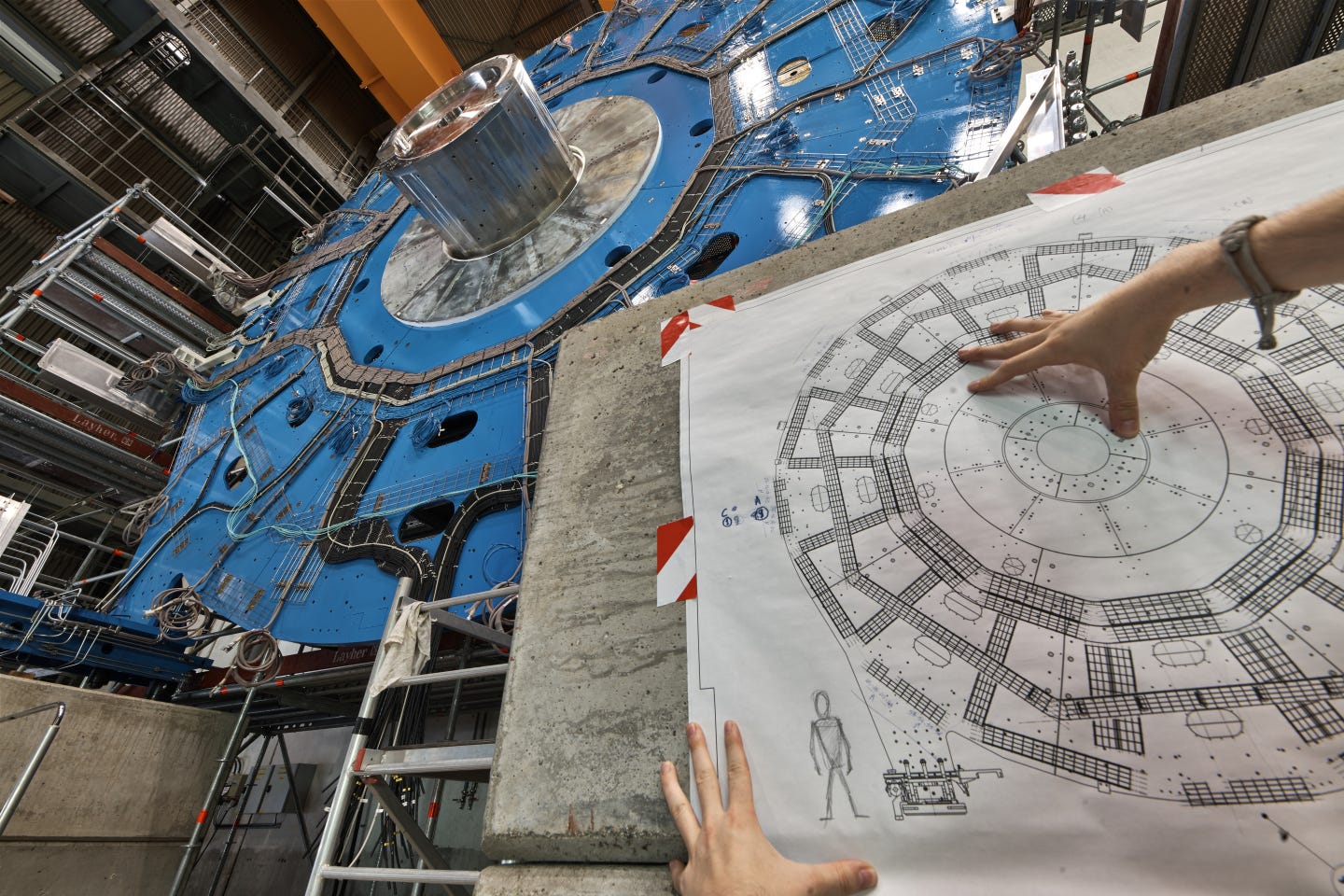

Experimental Data Analysis: The analysis of experimental data, such as that produced by the Large Hadron Collider (LHC), could be greatly enhanced by quantum algorithms. These algorithms can potentially handle the vast amounts of data and complex correlations found in particle physics experiments more efficiently than classical algorithms.

An Example: D-Theory Overview

D-Theory, named for the dimensional reduction of discrete variables, is an alternative formulation of lattice field theory in which continuous classical fields are replaced by discrete quantum degrees of freedom. These quantum degrees of freedom live in a space with one additional dimension. When the extent of that extra dimension is small compared with the correlation length, the system undergoes dimensional reduction and describes the lower-dimensional quantum field theory of interest, such as those encountered in high-energy physics.

Key Concepts of D-Theory:

Quantum Links: Instead of using continuous fields as in traditional lattice gauge theory, D-Theory uses discrete quantum variables, known as quantum links. These quantum links are operators that reside on the links of a spatial lattice, representing the gauge degrees of freedom.

Dimensional Reduction: The core idea is that a quantum system defined in one more dimension than the target theory effectively reduces to that lower-dimensional theory. For example, in the D-Theory literature the 2D O(3) model emerges from a (2+1)-dimensional quantum spin model, and 4D QCD is expected to emerge from a 5D quantum link model.

Asymptotic Freedom and Non-Perturbative Effects: D-Theory is particularly interesting because it can naturally handle phenomena like asymptotic freedom and non-perturbative effects, which are crucial in quantum field theories like Quantum Chromodynamics (QCD).

Analog and Digital Quantum Simulators: These are essential tools in D-Theory for exploring the dynamics and properties of quantum field theories. They help simulate the complex interactions in a controlled environment.
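
To make the quantum link idea above more concrete, here is a minimal sketch of the smallest U(1) quantum link, assuming the common spin-1/2 representation in which the link operator is the spin raising operator and the electric field is the z-component of the spin. This representation and all variable names are illustrative assumptions, not notation taken from the article.

```python
import numpy as np

# Spin-1/2 operators (hbar = 1) in the basis {|up>, |down>}.
S_PLUS = np.array([[0, 1], [0, 0]], dtype=complex)   # raising operator S^+
S_MINUS = S_PLUS.conj().T                            # lowering operator S^-
S_Z = 0.5 * np.array([[1, 0], [0, -1]], dtype=complex)

# Assumed spin-1/2 U(1) quantum link on a single lattice link:
# link operator U = S^+, its adjoint U_DAG = S^-, electric field E = S^z.
U = S_PLUS
U_DAG = S_MINUS
E = S_Z


def commutator(a, b):
    """Return the matrix commutator [a, b] = ab - ba."""
    return a @ b - b @ a


checks = {
    # U raises the electric flux on the link by one unit.
    "[E, U] == U": np.allclose(commutator(E, U), U),
    # U_DAG lowers the electric flux by one unit.
    "[E, U_DAG] == -U_DAG": np.allclose(commutator(E, U_DAG), -U_DAG),
    # Unlike a continuous Wilson link, U and U_DAG do not commute.
    "[U, U_DAG] == 2E": np.allclose(commutator(U, U_DAG), 2 * E),
    # The quantum link operator is not unitary.
    "U is unitary": np.allclose(U @ U_DAG, np.eye(2)),
}

for name, holds in checks.items():
    print(f"{name}: {holds}")
```

The point of the sketch is that each link carries a two-dimensional Hilbert space, so it maps onto a single qubit, whereas a continuous link variable would need an infinite-dimensional space per link.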

Quantum Computing's Role in D-Theory

Quantum computing can significantly enhance the study and application of D-Theory in several ways:

Simulation of Complex Systems: Quantum computers can simulate quantum link models efficiently. Since these models involve quantum states with large Hilbert spaces, classical computers struggle with the exponential scaling of required resources. Quantum computers, however, can represent and manipulate these states naturally, providing a practical way to simulate D-Theory.

Real-Time Dynamics: One of the major challenges in classical simulations of D-Theory is capturing real-time dynamics, especially in non-perturbative regimes. Quantum computers can simulate these dynamics directly, offering insights into time evolution and scattering processes in quantum field theories.

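Handling the Sign Problem: Classical Monte Carlo simulations of certain regimes, especially real-time dynamics or high fermion densities, suffer from the "sign problem": the sampling weights become negative or complex, and the statistical error grows exponentially with system size, which makes calculations computationally infeasible. Quantum computers evolve the quantum state directly rather than sampling such weights, so they are not hindered by this problem in the same way, allowing for more accurate simulations.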

Efficient Resource Use: Quantum computers can encode quantum link models more efficiently, using qubits to represent quantum degrees of freedom. This reduces the computational complexity compared to classical approaches, making it feasible to explore larger systems and more complex interactions.

Exploration of New Phases and Phenomena: By using quantum computing to simulate D-Theory, researchers can explore new quantum phases and phenomena that are difficult or impossible to study using classical methods. This could lead to the discovery of new physical insights and enhance our understanding of quantum field theories.
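
As a rough illustration of the real-time dynamics point above, the following sketch compares exact time evolution with a first-order Trotter product formula, a basic building block of many digital quantum simulation schemes. The transverse-field Ising chain is a stand-in chosen for brevity; it is not a quantum link model and not a model taken from the article. The function names and the couplings `j` and `h` are hypothetical. Note that the classical reference calculation stores matrices of size 2^n by 2^n, which is the exponential cost mentioned above.

```python
import numpy as np
from functools import reduce

I2 = np.eye(2, dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)


def op_on(site_ops, n):
    """Tensor product placing the given single-qubit operators on an n-qubit chain."""
    return reduce(np.kron, [site_ops.get(i, I2) for i in range(n)])


def ising_terms(n, j=1.0, h=0.7):
    """Return (H_zz, H_x) for an open transverse-field Ising chain (toy stand-in)."""
    dim = 2 ** n
    h_zz = np.zeros((dim, dim), dtype=complex)
    h_x = np.zeros((dim, dim), dtype=complex)
    for i in range(n - 1):
        h_zz -= j * op_on({i: Z, i + 1: Z}, n)
    for i in range(n):
        h_x -= h * op_on({i: X}, n)
    return h_zz, h_x


def evolve(hamiltonian, t):
    """Return exp(-i H t) for a Hermitian H via its eigendecomposition."""
    vals, vecs = np.linalg.eigh(hamiltonian)
    return (vecs * np.exp(-1j * vals * t)) @ vecs.conj().T


def trotter(h_a, h_b, t, steps):
    """First-order Trotter approximation of exp(-i (H_a + H_b) t)."""
    step = evolve(h_a, t / steps) @ evolve(h_b, t / steps)
    return np.linalg.matrix_power(step, steps)


def trotter_error(n=3, t=1.0, steps=10):
    """Norm of the difference between exact and Trotterized states from |00...0>."""
    h_zz, h_x = ising_terms(n)
    psi0 = np.zeros(2 ** n, dtype=complex)
    psi0[0] = 1.0
    exact = evolve(h_zz + h_x, t) @ psi0
    approx = trotter(h_zz, h_x, t, steps) @ psi0
    return np.linalg.norm(exact - approx)


for steps in (5, 50):
    print(steps, trotter_error(steps=steps))
```

Running the loop should show the error shrinking as the number of Trotter steps grows. On quantum hardware each step would correspond to a layer of gates rather than a matrix product, so the gate count grows with the number of steps while the qubit count stays fixed at n.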

In summary, D-Theory provides an innovative approach to studying quantum field theories by leveraging the power of dimensional reduction and quantum degrees of freedom. Quantum computing plays a crucial role in making the simulation and analysis of D-Theory feasible, opening up new avenues for exploring the fundamental laws of nature in high-energy physics.

Challenges and Research Directions

While the potential is vast, the article also outlines several challenges that must be addressed to realize the benefits of quantum computing in HEP. These challenges include:

Error Mitigation: Current quantum computers are prone to errors, and developing robust error mitigation techniques is crucial for reliable computations.

Scalability: Many HEP problems require simulations on large-scale quantum computers, which are still in the developmental stages. The article discusses the need for quantum hardware capable of handling hundreds of qubits and executing thousands of quantum gates reliably.

Algorithm Development: Quantum algorithms tailored to specific HEP problems still need to be developed. This includes algorithms for simulating quantum field theories, particle interactions, and data analysis.

The article also stresses the importance of platform-agnostic developments, ensuring that advancements in quantum algorithms and techniques can be applied across different quantum computing platforms.
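
To give a flavor of the error mitigation challenge listed above, here is a minimal sketch of zero-noise extrapolation, one widely used mitigation technique; the summary above does not single out any particular method, so this choice is an assumption. The idea is to measure an observable at several deliberately amplified noise levels and extrapolate back to zero noise. The exponential noise model, the `decay` parameter, and the function names are hypothetical and chosen only to keep the example deterministic.

```python
import numpy as np


def noisy_expectation(ideal, noise_scale, decay=0.15):
    """Toy noise model (assumption): the signal decays exponentially with noise scale."""
    return ideal * np.exp(-decay * noise_scale)


def zero_noise_extrapolate(scales, values, degree=2):
    """Fit a polynomial in the noise scale and evaluate it at zero noise."""
    coeffs = np.polyfit(scales, values, degree)
    return np.polyval(coeffs, 0.0)


ideal = 1.0                             # noiseless value, unknown in practice
scales = np.array([1.0, 2.0, 3.0])      # 1.0 = native hardware noise level
measured = noisy_expectation(ideal, scales)
mitigated = zero_noise_extrapolate(scales, measured)

print("unmitigated error:", abs(measured[0] - ideal))
print("mitigated error:  ", abs(mitigated - ideal))
```

In this toy setting the extrapolated value lands closer to the ideal one than the raw measurement at the native noise level. On real devices the measured values also carry statistical noise, which the extrapolation amplifies, so the benefit has to be weighed against the extra circuit runs.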

Conclusion

The roadmap set forth by the authors aims to guide the HEP community in leveraging quantum computing to tackle some of the most pressing and complex problems in the field. By focusing on both theoretical and experimental applications, and addressing the associated challenges, the article lays the groundwork for future research and development that could lead to significant breakthroughs in high-energy physics through the use of quantum computing.

This collaboration between leading institutions in HEP and quantum computing exemplifies the interdisciplinary approach required to push the boundaries of both fields, potentially leading to discoveries that could reshape our understanding of the universe.